Extreme Scale computing is marked by multiple-levels of hierarchy and heterogeneity ranging from the compute units to storage devices to the network interconnects. Owing to the plethora of heterogeneous communication paths with different cost models expected to be present in extreme scale systems, data movement is seen as the soul of different challenges for exascale computing. On the other hand, advances in networking technologies such as NoCs (like NVLink), RDMA enabled networks and the like are constantly pushing the envelope of research in the field of novel communication and computing architectures for extreme scale computing. The goal of this workshop is to bring together researchers and software/hardware designers from both academy and industry who are involved in creating network-based computing solutions for extreme scale architectures, to share their experiences and to learn the opportunities and challenges in designing next-generation HPC systems and applications.

ExaComm welcomes original submissions in a range of areas, including but not limited to:

- Scalable communication protocols

- High performance networks

- Runtime/middleware designs

- Impact of high performance networks on Deep Learning / Machine Learning

- Impact of high performance networks on Big Data

- Novel hardware/software co-design

- High performance communication solutions for accelerator based computing

- Power-aware techniques and designs

- Performance evaluations

- Quality of Service (QoS)

- Resource virtualization and SR-IOV

Workshop Program | |

9:00 - 9:15 |

Opening Remarks |

9:15 - 10:15 |

Keynote: Exascale Computing Architecture Trends and Implications for Programming Systems Slide 1, Slide 2 Speaker: John M. Shalf, CTO, National Energy Research Scientific Computing Center and Department Head for Computer Science and Data Sciences at Lawrence Berkeley National Laboratory (LBNL) Abstract: For the past twenty-five years, a single model of parallel programming (largely bulk-synchronous MPI), has for the most part been sufficient to permit translation of this into reasonable parallel programs for more complex applications. In 2004, however, a confluence of events changed forever the architectural landscape that underpinned our current assumptions about what to optimize for when we design new algorithms and applications. We have been taught to prioritize and conserve things that were valuable 20 years ago, but the new technology trends have inverted the value of our former optimization targets. The time has come to examine the end result of our extrapolated design trends and use them as a guide to re-prioritize what resources to conserve in order to derive performance for future applications. This talk will describe the challenges of programming future computing systems. It will then provide some highlights from the search for durable programming abstractions more closely track emerging computer technology trends so that when we convert our codes over, they will last through the next decade. |

10:15 - 11:00 |

Invited Talk 1: Paving the road to Exascale Slides Speaker: Dror Goldenberg, Vice President - Architecture, Mellanox Technologies Abstract: The exponential growth in data and the ever growing demand for higher performance to serve the requirements of the leading scientific applications, drive the need for higher scale system and the ability to connect tens-of-thousands of heterogeneous compute nodes in a very fast and efficient way. The interconnect has become the enabler of data and the enabler of efficient simulations. Beyond throughput and latency, the interconnect needs be able to offload the processing units from the communications work in order to deliver the desired efficiency and scalability. 100Gb/s solutions have already been demonstrated, and new large scale topologies are being discussed. The session will review the need for speed, new usage models and how the interconnect can play a major role in enabling applications scalability. |

11:00 - 11:30 |

Coffee Break |

11:30 - 12:15 |

Invited Talk 2: Introspection for Exascale Communication: Needs, Opportunities, Tools and Interfaces Slides Speaker: Martin Schulz, Computer Scientist, Lawrence Livermore National Laboratory Abstract:

The rising complexity of communication architectures coupled with new system

and application demands, creates new performance and optimization challenges.

This includes issues like node mapping strategies and contention avoiding message

scheduling, but also goes all the way to power and resilience concerns. Current

network architectures, however, provide very little introspection capabilities that

help system and application developers detect and mitigate such issues.

|

12:15 - 1:00 |

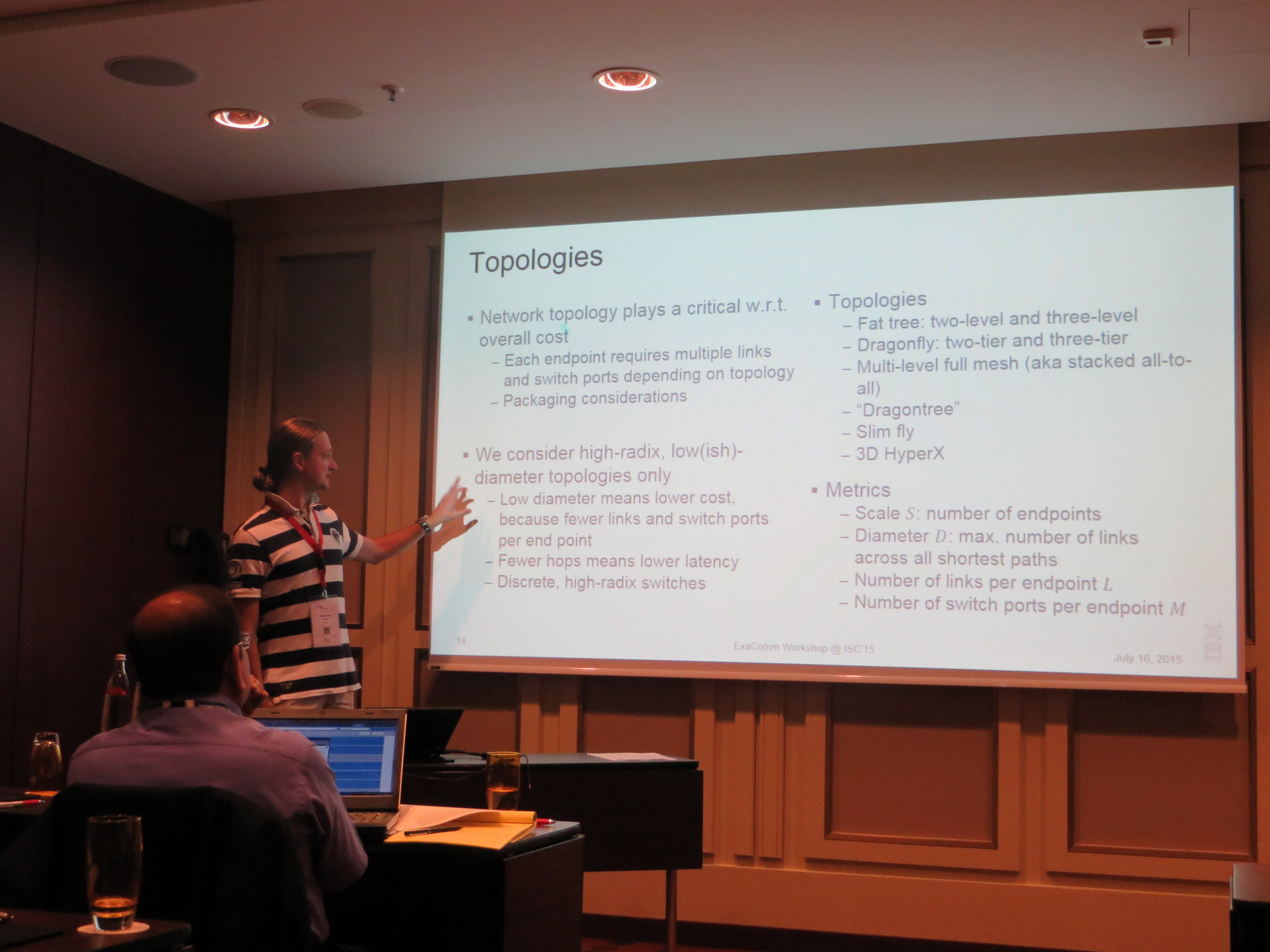

Invited Talk 3: Exploring Exascale Interconnection Network Topology Options Slides Speaker: Cyriel Minkenberg, Research Staff Member, IBM Zurich Research Laboratory, Zurich, Switzerland Abstract: Interconnection networks for (pre-)exascale HPC systems face a number of obstacles, among which scale, power, performance, and cost feature prominently. In this talk, I'll touch upon these challenges, and argue that at present, more than anything else, the overall network cost is the limiting factor. To some extent, this limitation is mitigated by increasing node "fatness" brought about by the growing use of accelerators to drastically increase per-node compute capacity, which in turn implies a reduced number of network endpoints. This trend opens up opportunities for significant cost and latency reductions by employing low-diameter networks. I will review several promising low-diameter topologies that can achieve the desired scale with moderate-radix switches, and discuss their relative merits and drawbacks. Despite these potential optimizations, the byte/FLOP ratio of these systems is expected to be substantially lower than that of today's systems, which will require a more fundamental rethinking of the balance of communication and computation in systems of such scale. |

1:00 - 2:00 |

Lunch Break |

2:00 - 2:45 |

Invited Talk 4: Interconnect Related Research at Oak Ridge National Laboratory Slides Speaker: Arthur (Barney) Maccabe, Division Director, Computer Science and Mathematics, Oak Ridge National Laboratory Abstract: Interconnect performance is not tracking the projections needed to meet the Exascale targets identified in the DOE workshop that was held in San Diego, California in December of 2009. Staff in the Computer Science and Mathematics Division at Oak Ridge National Laboratory are engaged in a wide range of research projects that address the challenges associated with interconnect performance, including: characterization of an application.s use of the interconnect, programming models and runtimes (Open MPI and OpenSHMEM), abstract interfaces (UCCS/UCX), operating systems (Hobbes), on-.node interconnects and memory systems (Oxbow), and I/O networks. As we consider an integration of the understanding that we have gained from these projects, we are starting to explore the possibility of a uniform abstraction that addresses the multiple levels of interconnects (intra-node, inter-node and inter-system), the transformation of data in transit, atomic operations on storage, and the possibility of data corruption. |

2:45 - 4:00 |

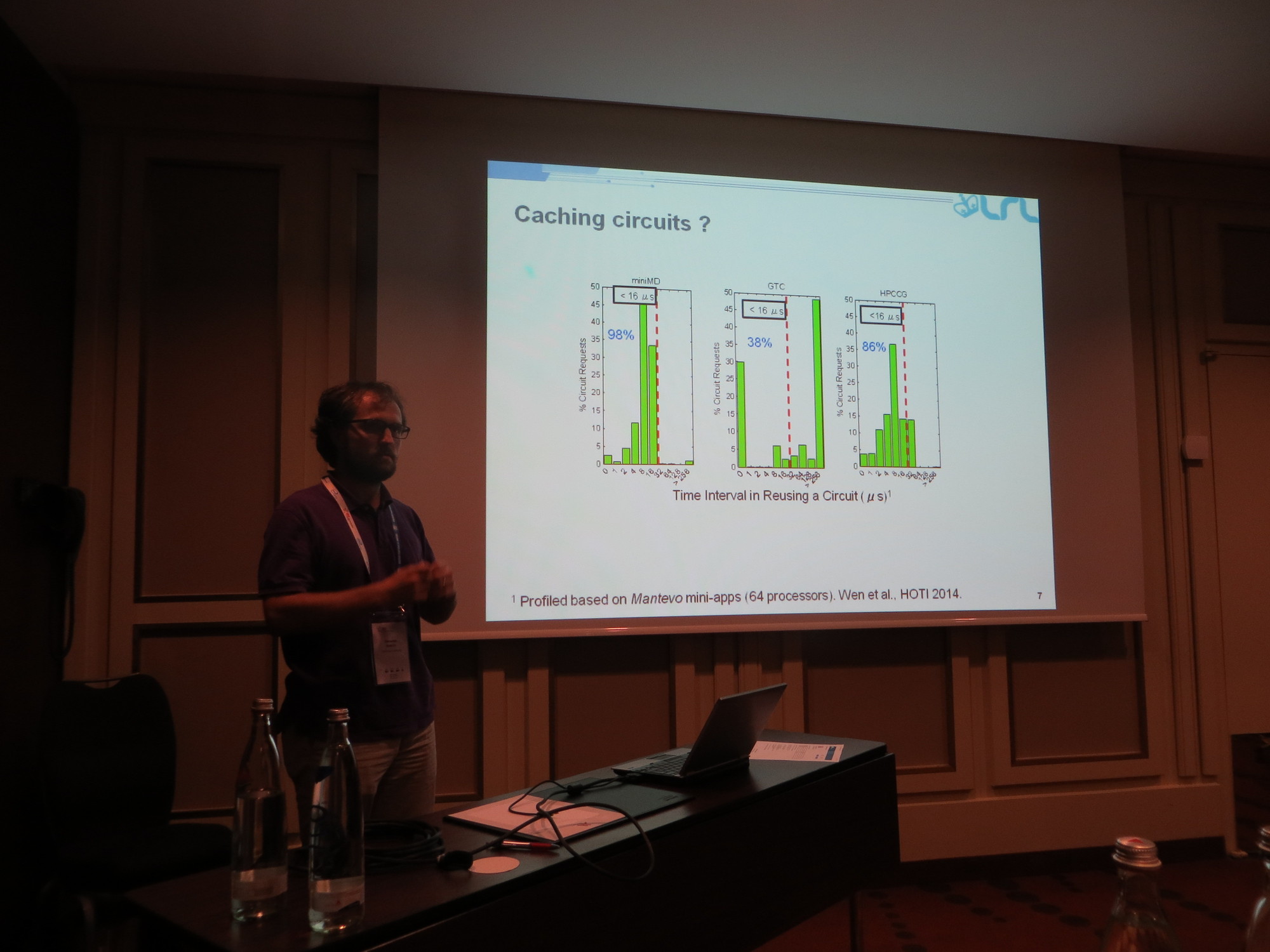

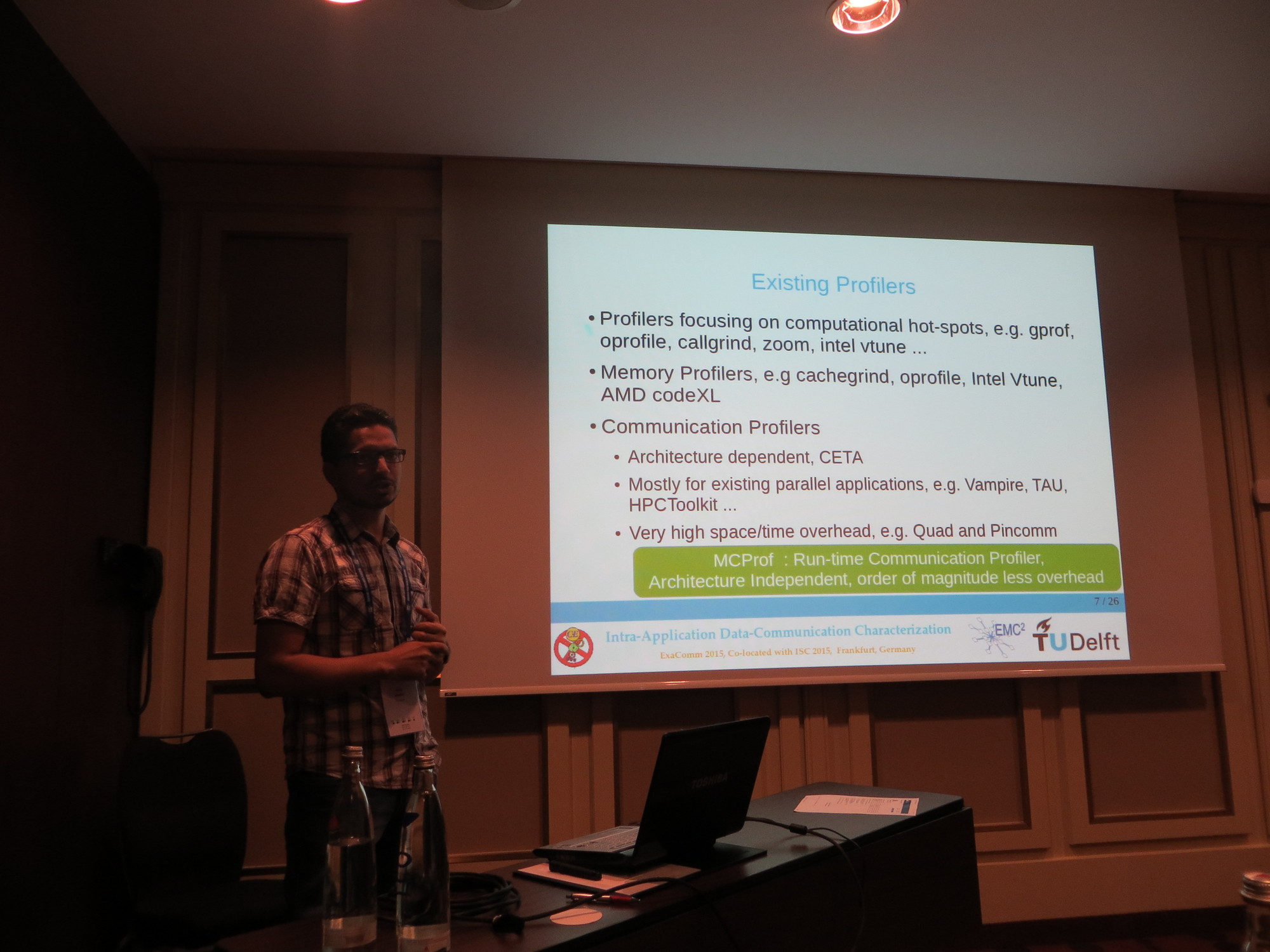

Research Paper Session Session Chair: Hari Subramoni Latency-avoiding Dynamic Optical Circuit Prefetching Using Application-specific Predictors, Ke Wen (Columbia University), Sebastien Rumley (Columbia University), Jeremiah Wilke and Keren Bergman (Columbia University) Intra-Application Data-Communication Characterization, Imran Ashraf, Vlad-Mihai Sima and Koen Bertels, Delft University of Technology "/static/media/mvapich/mvapich2-2.2-userguide.html" Paper, Slides |

4:00 - 4:30 |

Coffee Break |

4:30 - 6:00 |

Panel: Top 5 Challenges for Exascale Interconnects Panel Moderator : Ron Brightwell, Sandia National Laboratories Panel Members:

Slides coming soon! |

6:00 - 6:10 |

Closing Remarks |

Organizing Committee

Program Chairs

- Dhabaleswar K. (DK) Panda, The Ohio State University

- Hari Subramoni, The Ohio State University

- Khaled Hamidouche, The Ohio State University

Program Committee

- Taisuke Boku, University of Tsukuba, Japan

- Ron Brightwell, Sandia National Laboratories

- Luiz DeRose, Cray

- Jose Duato, Polytechnic University of Valencia, Spain

- Hans Eberle, NVIDIA

- Ada Gavrilovska, Georgia Tech

- Dror Goldenberg, Mellanox Technologies

- Torsten Hoefler, ETH Zürich, Zurich, Switzerland

- Hai Jin, Huazhong University of Science and Technology, Wuhan, China

- Cyriel Minkenberg, IBM Zurich Research Laboratory, Zurich, Switzerland

- Stephen W. Poole, United States Department of Defense

- Sebastien Rumley, Columbia University

- Martin Schulz, Lawrence Livermore National Laboratory

- John M. Shalf, National Energy Research Scientific Computing Center / Lawrence Berkeley National Laboratory

- Sayantan Sur, Intel