Extreme Scale computing in HPC, Big Data, Deep Learning and Clouds are marked by multiple-levels of hierarchy and heterogeneity ranging from the compute units (many-core CPUs, GPUs, APUs etc) to storage devices (NVMe, NVMe over Fabrics etc) to the network interconnects (InfiniBand, High-Speed Ethernet, Omni-Path etc). Owing to the plethora of heterogeneous communication paths with different cost models expected to be present in extreme scale systems, data movement is seen as the soul of different challenges for exascale computing. On the other hand, advances in networking technologies such as NoCs (like NVLink), RDMA enabled networks and the likes are constantly pushing the envelope of research in the field of novel communication and computing architectures for extreme scale computing. The goal of this workshop is to bring together researchers and software/hardware designers from academia, industry and national laboratories who are involved in creating network-based computing solutions for extreme scale architectures. The objectives of this workshop will be to share the experiences of the members of this community and to learn the opportunities and challenges in the design trends for exascale communication architectures.

ExaComm welcomes original submissions in a range of areas, including but not limited to:

- Scalable communication protocols

- High performance networks

- Runtime/middleware designs

- Impact of high performance networks on Deep Learning / Machine Learning

- Impact of high performance networks on Big Data

- Novel hardware/software co-design

- High performance communication solutions for accelerator based computing

- Power-aware techniques and designs

- Performance evaluations

- Quality of Service (QoS)

- Resource virtualization and SR-IOV

8:50 - 9:00

Opening Remarks

Hari Subramoni and Dhabaleswar K (DK) Panda

The Ohio State University

Abstract

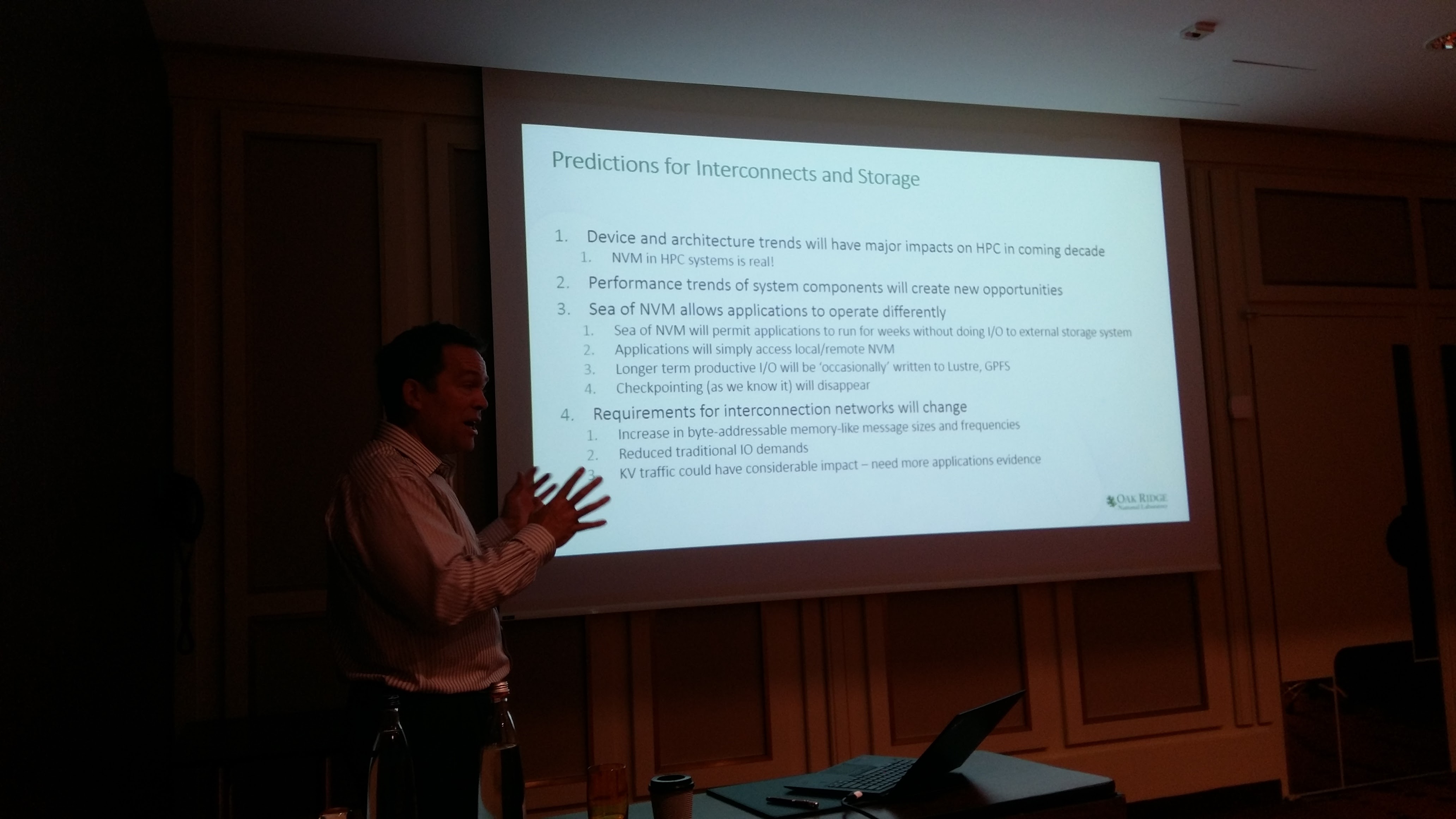

Concerns about energy-efficiency and cost are forcing our community to reexamine system architectures, and, specifically, the memory and storage hierarchy. While memory and storage technologies have remained relatively stable for nearly two decades, new architectural features, such as deep memory hierarchies, non-volatile memory (NVM), and near-memory processing, have emerged as possible solutions. However, these architectural changes will have a major impact on software systems and applications in our HPC ecosystem. Software will need to be redesigned to exploit these new capabilities. In this talk, I will sample these emerging memory technologies, discuss their architectural and software implications, and describe several new approaches to programming these systems. One system is Papyrus (Parallel Aggregate Persistent -yru- Storage); it is a programming system that aggregates NVM from across the system for use as application data structures, while providing performance portability across emerging NVM hierarchies.

Bio

Jeffrey Vetter, Ph.D., is a Distinguished R&D Staff Member at Oak Ridge National Laboratory (ORNL). At ORNL, Vetter is the founding group leader of the Future Technologies Group in the Computer Science and Mathematics Division. Vetter also holds joint appointments at the Georgia Institute of Technology and the University of Tennessee-Knoxville. Vetter earned his Ph.D. in Computer Science from the Georgia Institute of Technology. Vetter is a Senior Member of the IEEE, and a Distinguished Scientist Member of the ACM. In 2010, Vetter, as part of an interdisciplinary team from Georgia Tech, NYU, and ORNL, was awarded the ACM Gordon Bell Prize. Also, his work has won awards at major conferences including Best Paper Awards at the International Parallel and Distributed Processing Symposium (IPDPS) and EuroPar, Best Student Paper Finalist at SC14, and Best Presentation at EASC 2015. In 2015, Vetter served as the SC15 Technical Program Chair. His recent books, entitled "Contemporary High Performance Computing: From Petascale toward Exascale (Vols. 1 and 2)," survey the international landscape of HPC. See his website for more information: http://ft.ornl.gov/~vetter/.

Abstract

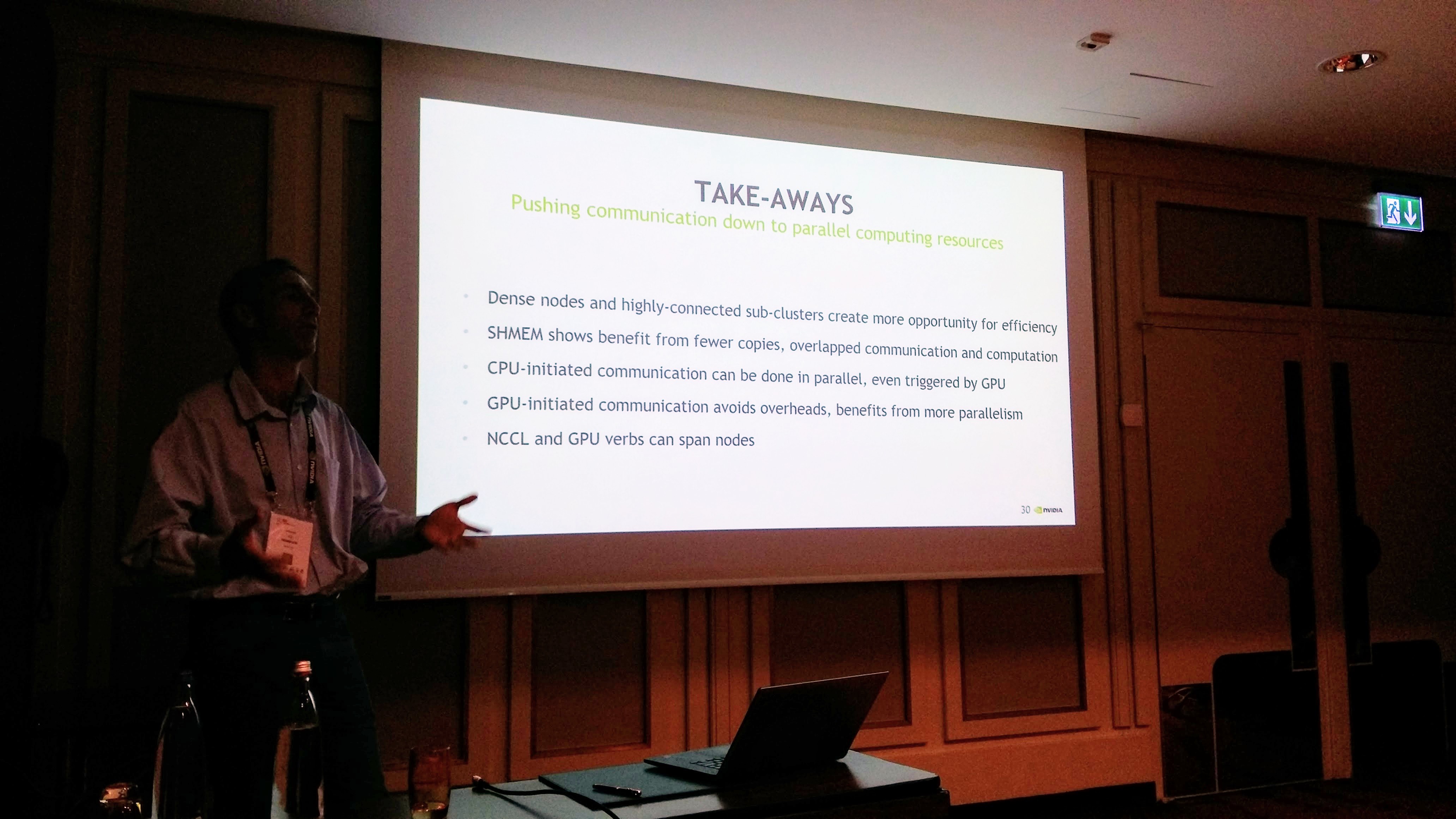

The most power-efficient and performant systems now include dense nodes that pack in several GPUs with CPUs, all connected to the network, storage and significant memory capacity. Such system present new opportunities and challenges. This talk shows an architectural path forward to scale, describes new and extended programming models and interfaces to achieve performance with greater ease on such systems, and shares early performance results that justify factoring codes a little differently and using these new interfaces. We demonstrate a transition path from pure MPI to a mix of MPI and SHMEM, highlight new GPU Direct functionality, and introduce a new infrastructure, HiHAT, which will enable more portable performance and help facilitate data movement within and among dense nodes. The relevance of interconnects will be highlighted in each of these cases.

Bio

Chris J. Newburn (CJ) is the Principal HPC Architect for NVIDIA Compute Software, with a special focus on systems and programming models for scale. He has contributed to a combination of hardware and software technologies over the last twenty years. He has a passion for architecting the richness of heterogeneous platforms so that they are easier to use and have lasting value. He has over 80 patents. We wrote a binary-optimizing, multi-grained parallelizing compiler as part of his Ph.D. at Carnegie Mellon University. Before grad school, in the 80s, he did stints at a couple of start-ups, working on a voice recognizer and a VLIW supercomputer. He's delighted to have worked on volume products that his Mom uses.

Abstract

In the near future, emerging applications such as big data analytics and neural network training are expected to be empowered by massively parallel in-memory processing technology derived from HPC. This talk will describe current challenges of the Tofu interconnect to accelerate big data analytics such as Graph500 benchmark, and discuss future challenges to deal with the rapid increase of system diversity after Moore's Law. As its first step, Fujitsu is developing a Deep Learning dedicated processor with a highly scalable interconnect.

Bio

Yuichiro Ajima is a computer system architect in Fujitsu and has worked with the Fujitsu’s supercomputer systems including the K computer for over 10 years. He has developed the Tofu interconnect series, and won the Ichimura Prize in Industry from the New Technology Development Foundation in 2012 and the Imperial Invention Prize from the Japan Institute of Invention and Innovation in 2014. He received the B.S. degree in Electrical Engineering, and the M.S. degree and Ph.D. in Information Engineering from the University of Tokyo in 1997, 1999, and 2002, respectively.

11:00 - 11:30

Break

Abstract

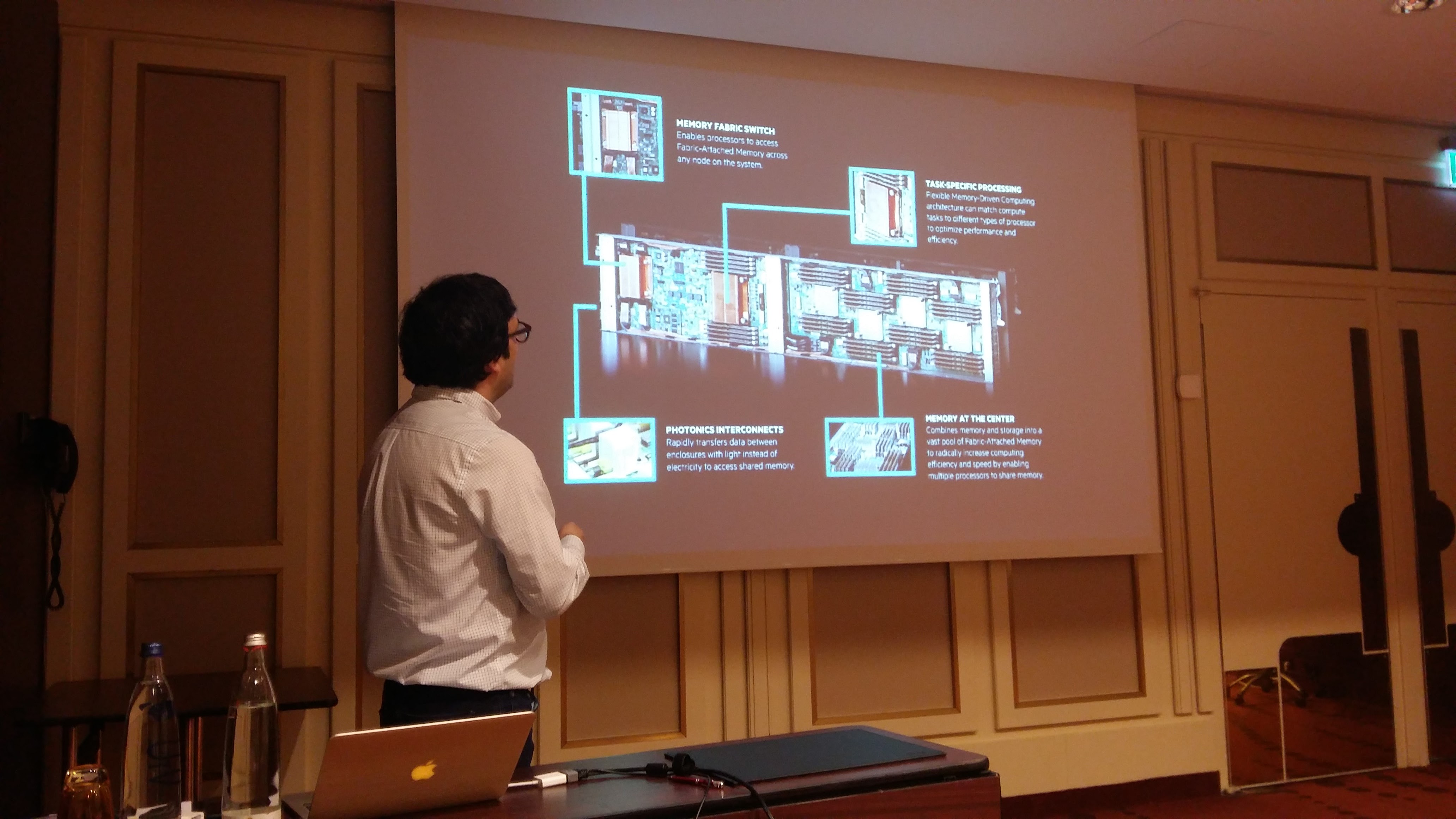

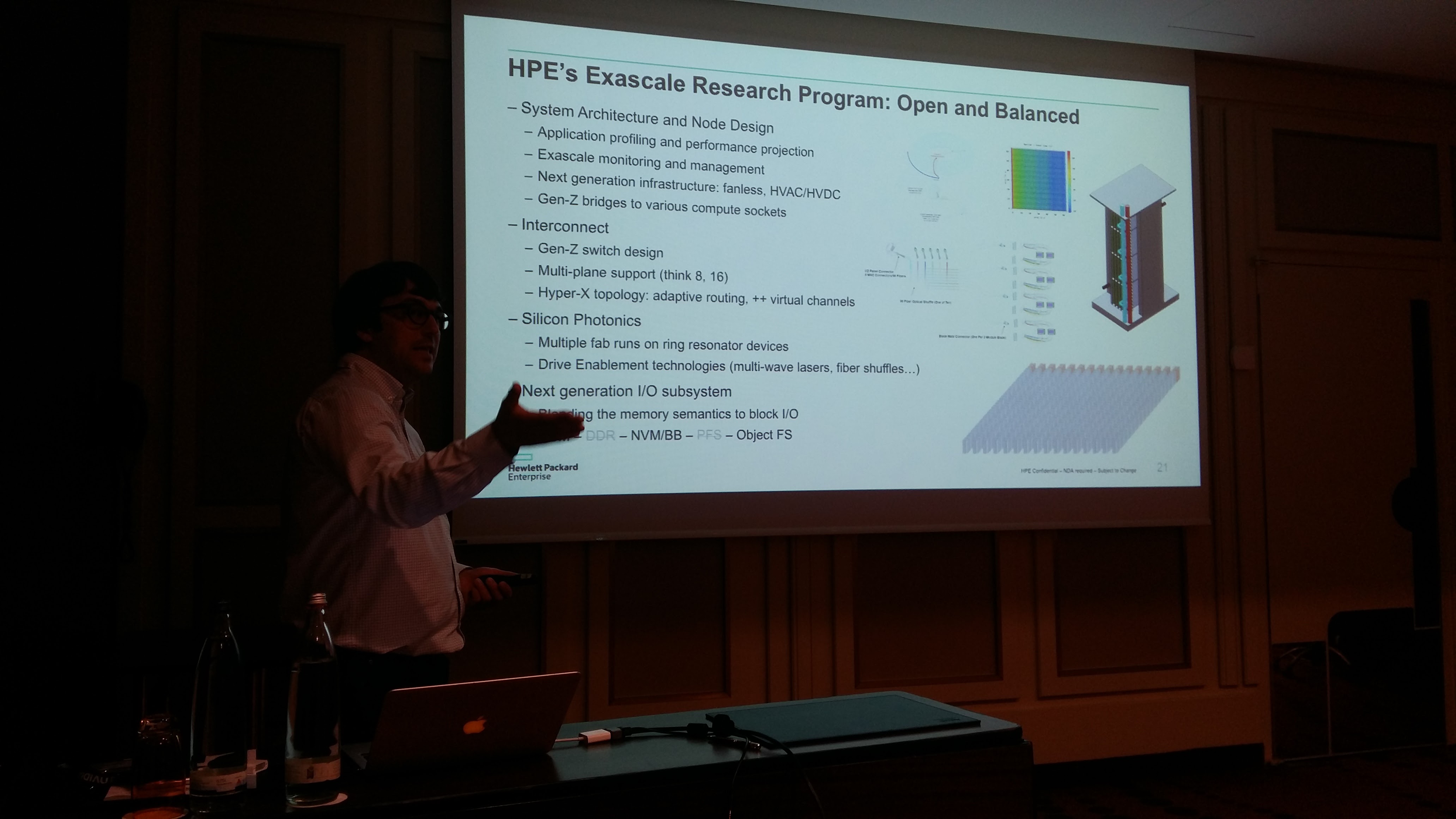

Leading to greater sustained performance, HPE’s architectural approach to Exascale aims to achieves far more balance when measured by key system metrics. We emphasize the pressing issues at extreme scale —communications and memory— rather than peak FLOPS. For the 2020s and beyond, HPE aims to build broadly capable systems that can accelerate a wide range of applications rather than a one-of-kind machine that can hit a Linpack exaflop.

HPE’s processor-independent, modular architecture is based upon an open and universal system protocol for memory and fabrics, Gen-Z, supported by a consortium of thirty companies (www.genzconsortium.org). Combined with the Hyper-X topology that takes advantage of high-radix routers, it enables exascale system interconnects with very few hops. The architecture introduces globally addressable storage based on fabric-attached non-volatile memory. HPE is also working closely with partners to develop Gen-Z enabled silicon with co-packaged high-performance memory, superior interconnect bandwidth, and a comprehensive software and application development stack. HPE’s vision for the exascale software stack is also voluntarily open and broad.

This talk will start by assessing the current technological trends that are impacting negatively current and future leadership HPC architectures. It will then present a forward-looking vision, leveraging an aggressive R&D agenda on system architecture, high-throughput interconnects, silicon photonics and NVM-native I/O subsystems.

Bio

Dr. Nicolas Dubé is the Chief Strategist for High-Performance Computing at Hewlett Packard Enterprise. He is the technical lead of the Advanced Development Team, a “skunkworks” group dedicated to the advancement of core technologies and the redefinition of system architecture for next generation supercomputers. He is also the Chief Architect for Exascale, driving a voluntarily more open and balanced vision. Previously, he lead the Apollo 8000 team as the system architect for NREL’s Peregrine, awarded R&D Awards 2014 “most significant innovation of the year”. Leveraging a combined experience in server and datacenter engineering, Nicolas advocates for a “greener” IT industry, leveraging warm water cooling, heat re-use and carbon neutral energy sources while pushing for dramatically more efficient computing systems.

Abstract

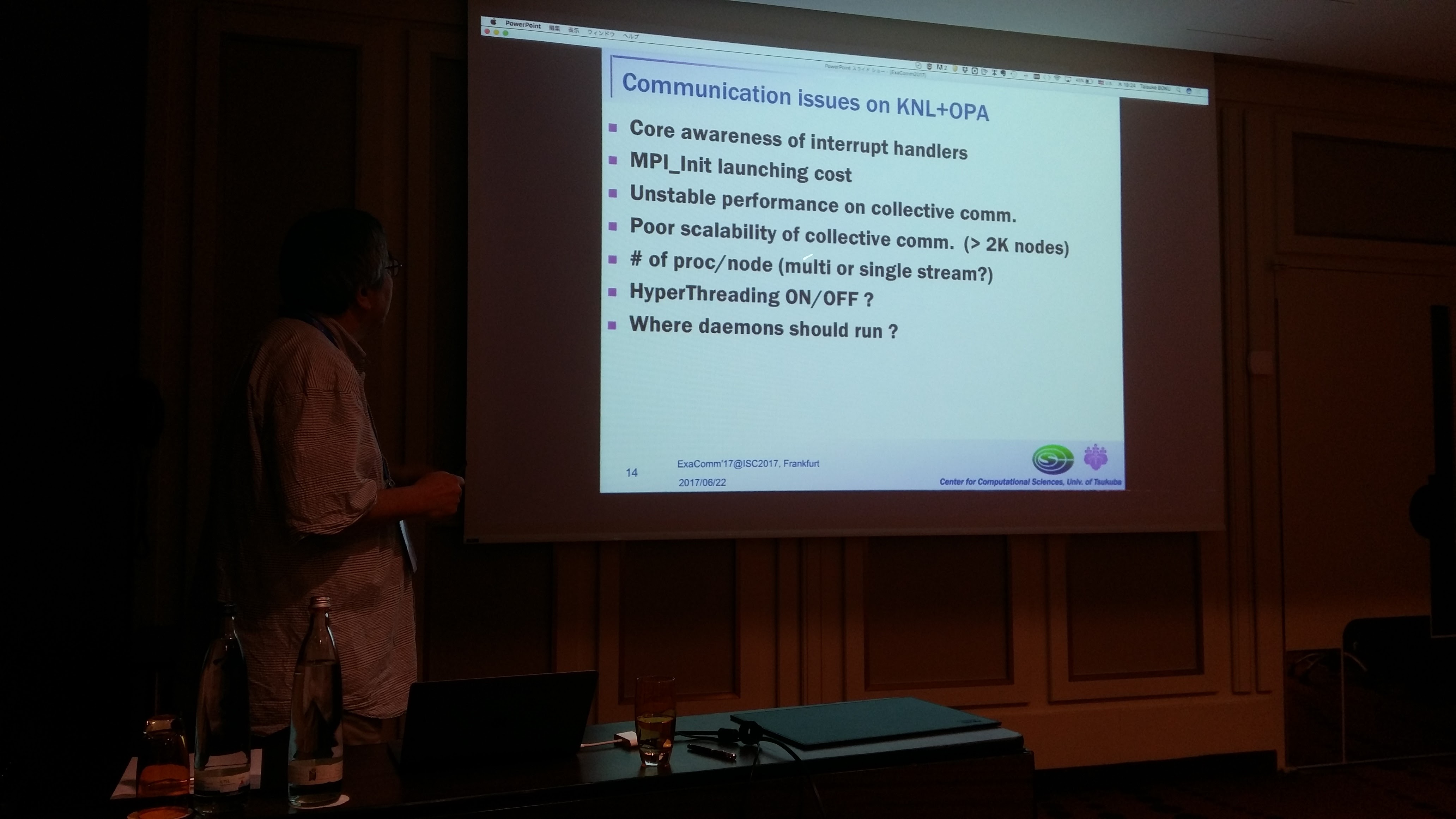

On December 2016, Joint Center for Advanced High Performance Computing (JCAHPC), a joint organization by University of Tsukuba and the University of Tokyo, launched the full system operation of Oakforest-PACS which is the world largest cluster with a coupling of Intel Knights Landing (KNL) CPU and Intel Omni Path Architecture (OPA) to provide 25 PFLOPS of theoretical peak performance. It is ranked at No.6 in TOP500 List on November 2016. The system is built on a number of new challenges such as new many-core architecture, compact implementation, new interconnection technology, high throughput I/O, etc.

In this talk, the overview of Oakforest-PACS system with several fundamental benchmark results on computation and communication as well as I/O performance is introduced. I will also introduce a couple of preliminary results on full-system size of application. Additionally, I will focus on several system issues to support cluter computing which happen on KNL's unique architecture and performance characteristics.

Bio

Taisuke Boku received Master and PhD degrees from Department of Electrical Engineering at Keio University. After his carrier as assistant professor in Department of Physics at Keio University, he joined to Center for Computational Sciences (former Center for Computational Physics) at University of Tsukuba where he is currently the deputy director, the HPC division leader and the system manager of supercomputing resources. He has been working there more than 20 years for HPC system architecture, system software, and performance evaluation on various scientific applications. In these years, he has been playing the central role of system development on CP-PACS (ranked as number one in TOP500 in 1996), FIRST (hybrid cluster with gravity accelerator), PACS-CS (bandwidth-aware cluster) and HA-PACS (high-density GPU cluster) as the representative supercomputers in Japan. From 2016, he has been working as the system operation manager of Oakforest-PACS, the fastest supercomputer in Japan under JCAHPC. He also contributed to the system design of K Computer as a member of architecture design working group in RIKEN and currently a member of system architecuture working group of Post-K Computer development. He is a coauthor of ACM Gordon Bell Prize in 2011.

Abstract

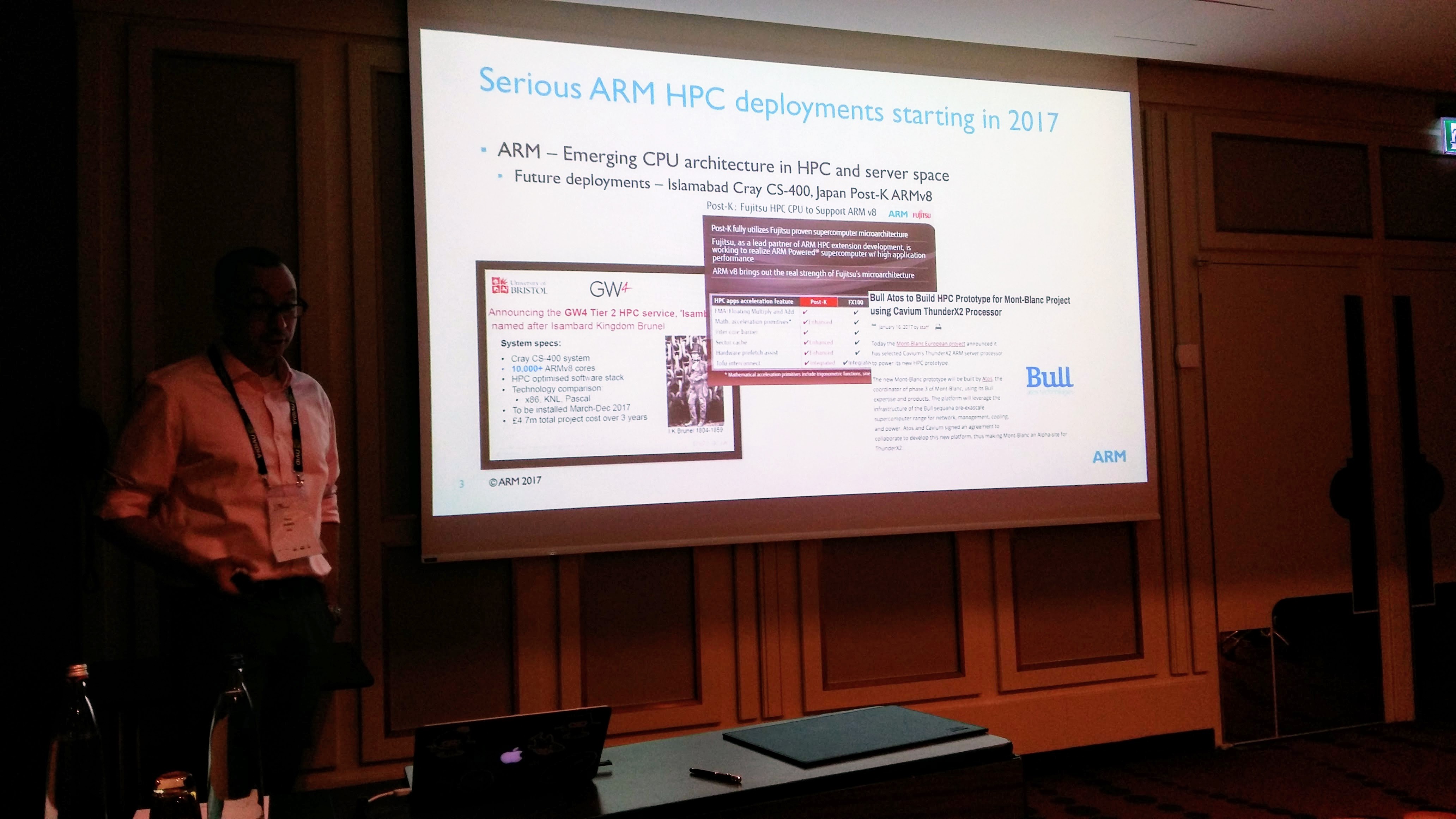

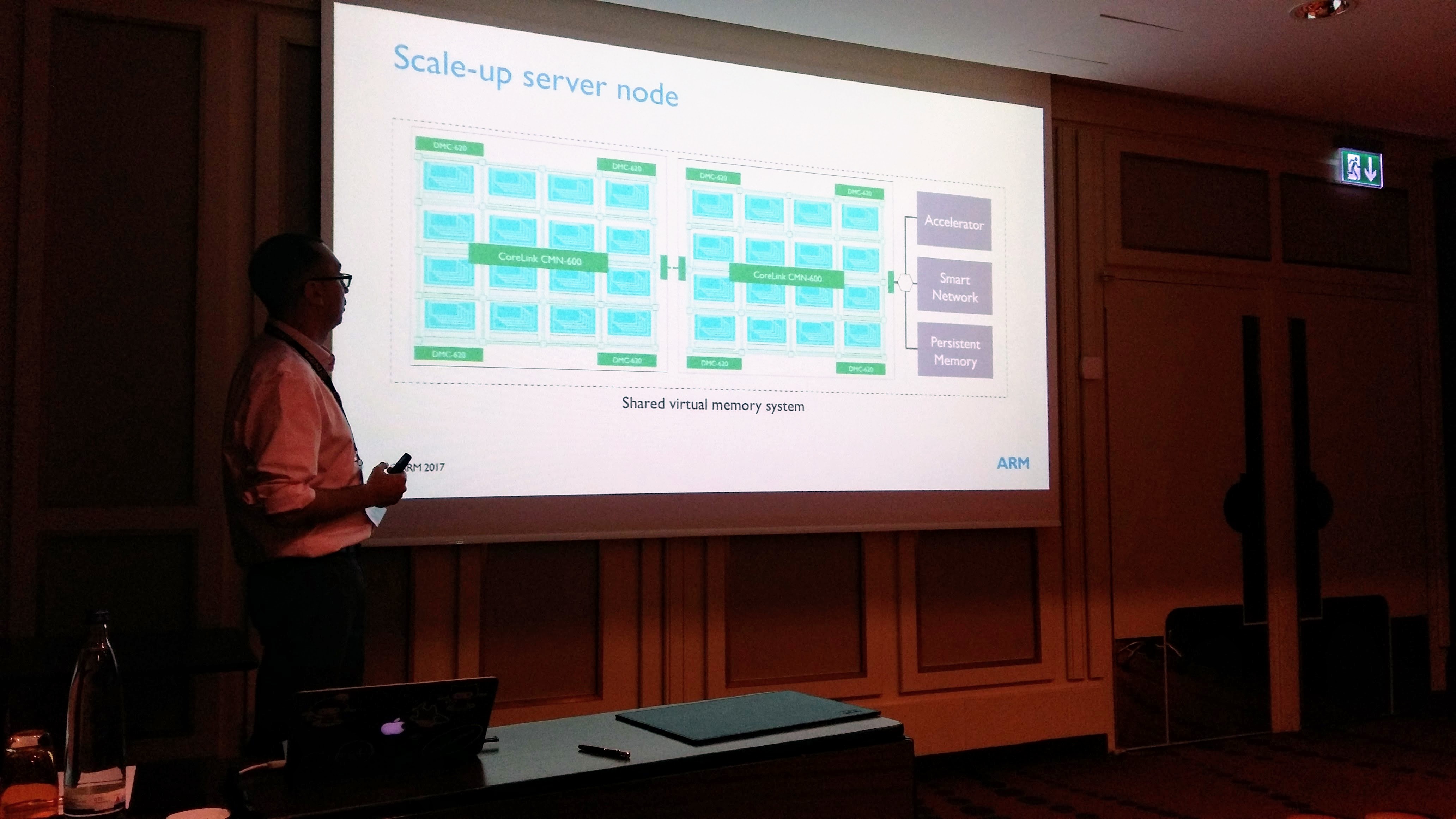

Applications, programming languages, and libraries that leverage sophisticated network hardware capabilities have a natural advantage when used in today’s and tomorrow’s high-performance and data center computer environments. Modern RDMA based network interconnects provides incredibly rich functionality (RDMA, Atomics, OS-bypass, etc.) that enable low-latency and high-bandwidth communication services. The functionality is supported by a variety of interconnect technologies such as InfiniBand, RoCE, iWARP, Intel OPA, Cray’s Aries/Gemini, and others.

Over the last decade, the HPC community has developed variety user/kernel level protocols and libraries that enable a variety of high-performance applications over RDMA interconnects including MPI, SHMEM, UPC, etc.

With the emerging availability HPC solutions based on ARM CPU architecture, it is important to understand how ARM integrates with the RDMA hardware and HPC network software stack. In this talk, we will overview ARM architecture and system software stack. We will discuss how ARM CPU interacts with network devices and accelerators. In addition, we will share our experience in enabling RDMA software stack and one-sided communication libraries (Open UCX, OpenSHMEM/SHMEM) on ARM and share preliminary evaluation results.

Bio

Pavel is a Principal Research Engineer at ARM with over 16 years of experience in development HPC solutions. His work is focused on co-design software and hardware building blocks for high-performance interconnect technologies, development communication middleware and novel programming models. Prior to joining ARM, he spent five years at Oak Ridge National Laboratory (ORNL) as a research scientist at Computer Science and Math Division (CSMD). In this role, Pavel was responsible for research and development multiple projects in high-performance communication domain including: Collective Communication Offload (CORE-Direct & Cheetah), OpenSHMEM, and OpenUCX. Before joining ORNL, Pavel spent ten years at Mellanox Technologies, where he led Mellanox HPC team and was responsible for development HPC software stack, including OFA software stack, OpenMPI, MVAPICH, OpenSHMEM, and other.

Pavel is a recipient of prestigious R&D100 award for his contribution in development of the CORE-Direct collective offload technology. In addition, Pavel has contributed to multiple open specifications (OpenSHMEM, MPI, UCX) and numerous open source projects (MVAPICH, OpenMPI, OpenSHMEM-UH, etc).

1:00 - 2:00

Lunch

Abstract

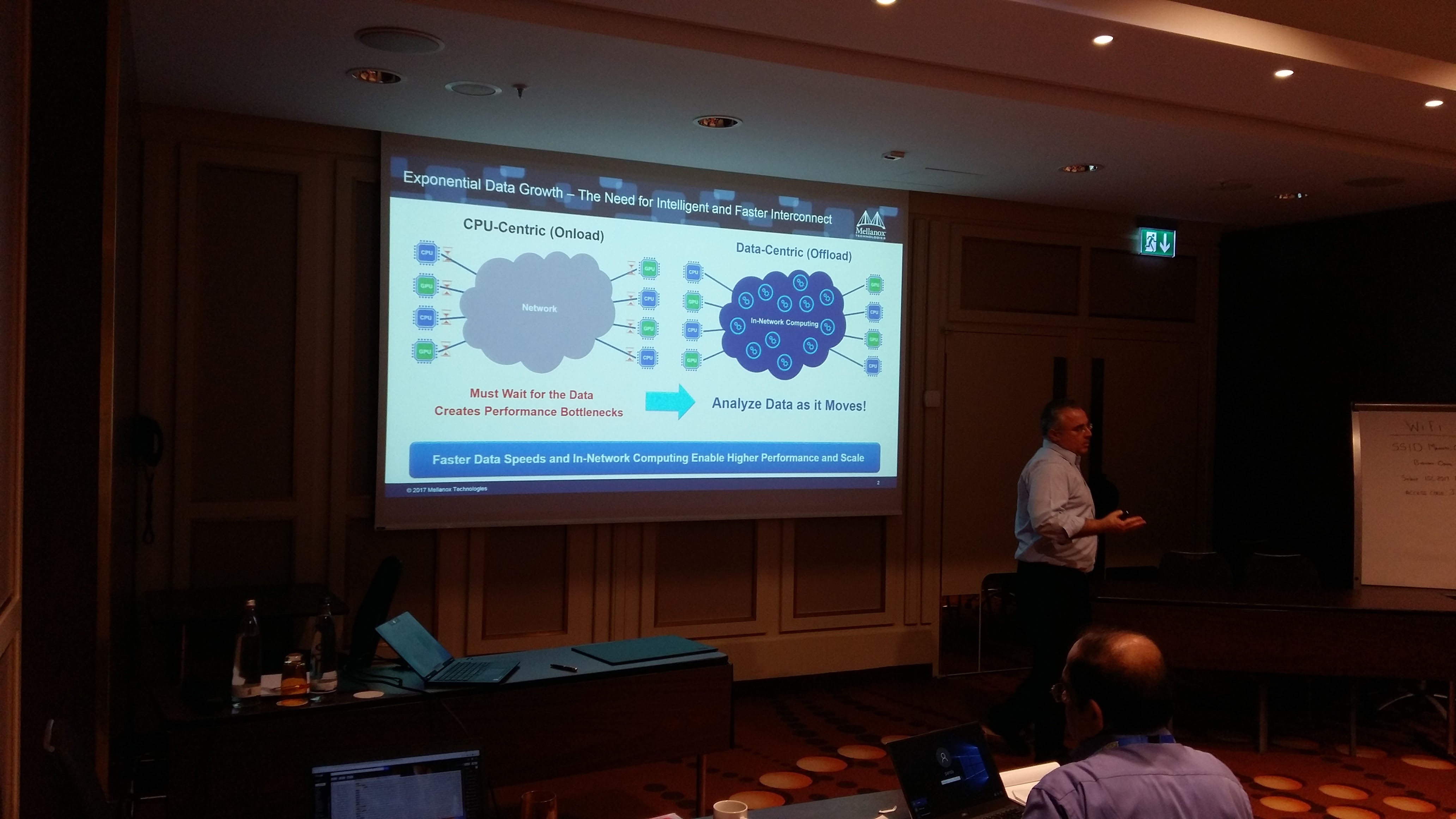

The latest revolution in HPC is the move to a co-design architecture, a collaborative effort among industry thought leaders, academia, and manufacturers to reach Exascale performance by taking a holistic system-level approach to fundamental performance improvements. Co-design architecture exploits system efficiency and optimizes performance by creating synergies between the hardware and the software, and between the different hardware elements within the data center. Co-design recognizes that the CPU has reached the limits of its scalability, and offers an intelligent network as the new “co-processor” to share the responsibility for handling and accelerating application workloads. By placing data-related algorithms on an intelligent network, we can dramatically improve data center and applications performance.

Smart interconnect solutions are based on an offloading architecture, which can offload all network functions from the CPU to the network, freeing CPU cycles and increasing the system’s efficiency. With the new efforts in the co-design approach, the interconnect will include more and more data algorithms that will be managed and executed within the network, allowing users to run data algorithms on the data as the data being transferred within the system interconnect, rather than waiting for the data to reach the CPU. The future interconnect will deliver In-Network Computing and In-Network Memory, which is the leading approach to achieve performance and scalability for Exascale systems.

Bio

Gilad Shainer has served as Mellanox's vice president of marketing since March 2013. Previously, Mr. Shainer was Mellanox's vice president of marketing development from March 2012 to March 2013. Mr. Shainer joined Mellanox in 2001 as a design engineer and later served in senior marketing management roles between July 2005 and February 2012. Mr. Shainer serves as the chairman of the HPC Advisory Council organization, he serves as a board member in the OpenPOWER, CCIX, OpenCAPI and UCF organizations, a member of IBTA and contributor to the PCISIG PCI-X and PCIe specifications. Mr. Shainer holds multiple patents in the field of high-speed networking. He is also a recipient of 2015 R&D100 award for his contribution to the CORE-Direct collective offload technology. Gilad Shainer holds a MSc degree and a BSc degree in Electrical Engineering from the Technion Institute of Technology in Israel.

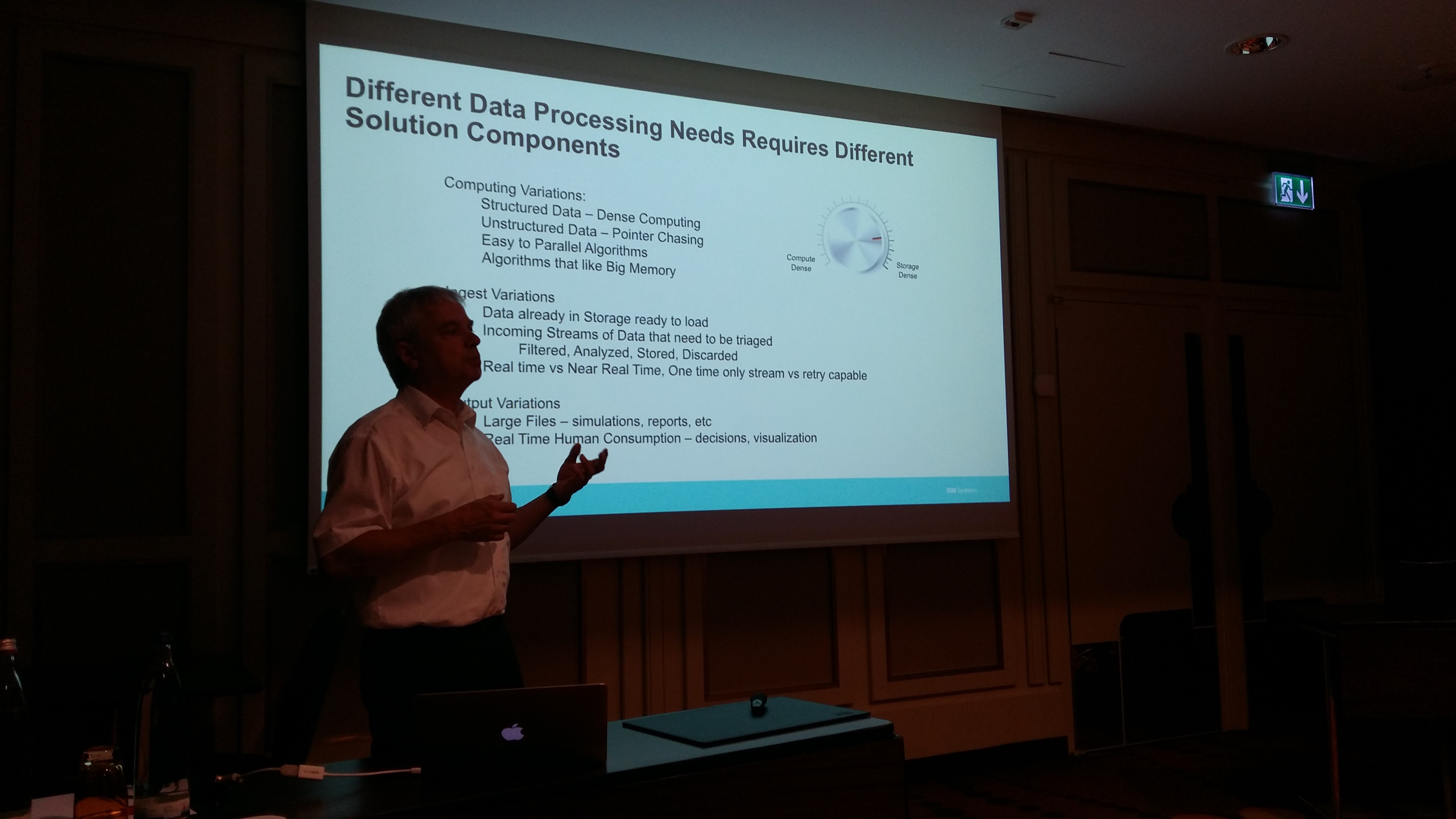

Abstract

Parallel programming strongly depends on fast communication. That includes network message exchange as well as communication between CPU and accelerators. Fast communication is a prerequisite and enablement for application performance. But current system interfaces seem to be at their limits. NVIDIA’s NVLink and the new OpenCAPI standard creates innovation here. The talk explains how this will influence parallel systems architectures.

Bio

Klaus Gottschalk is HPC- and machine learning architect at IBM. After he graduated with major in Mathematics he joined IBM in 1992 as HPC engineer. He is focusing on parallel system architecture, data analytics, machine learning and system sizing. Klaus is well connected with HPC- and AI customers in Europe and worldwide and is an expert in large system design.

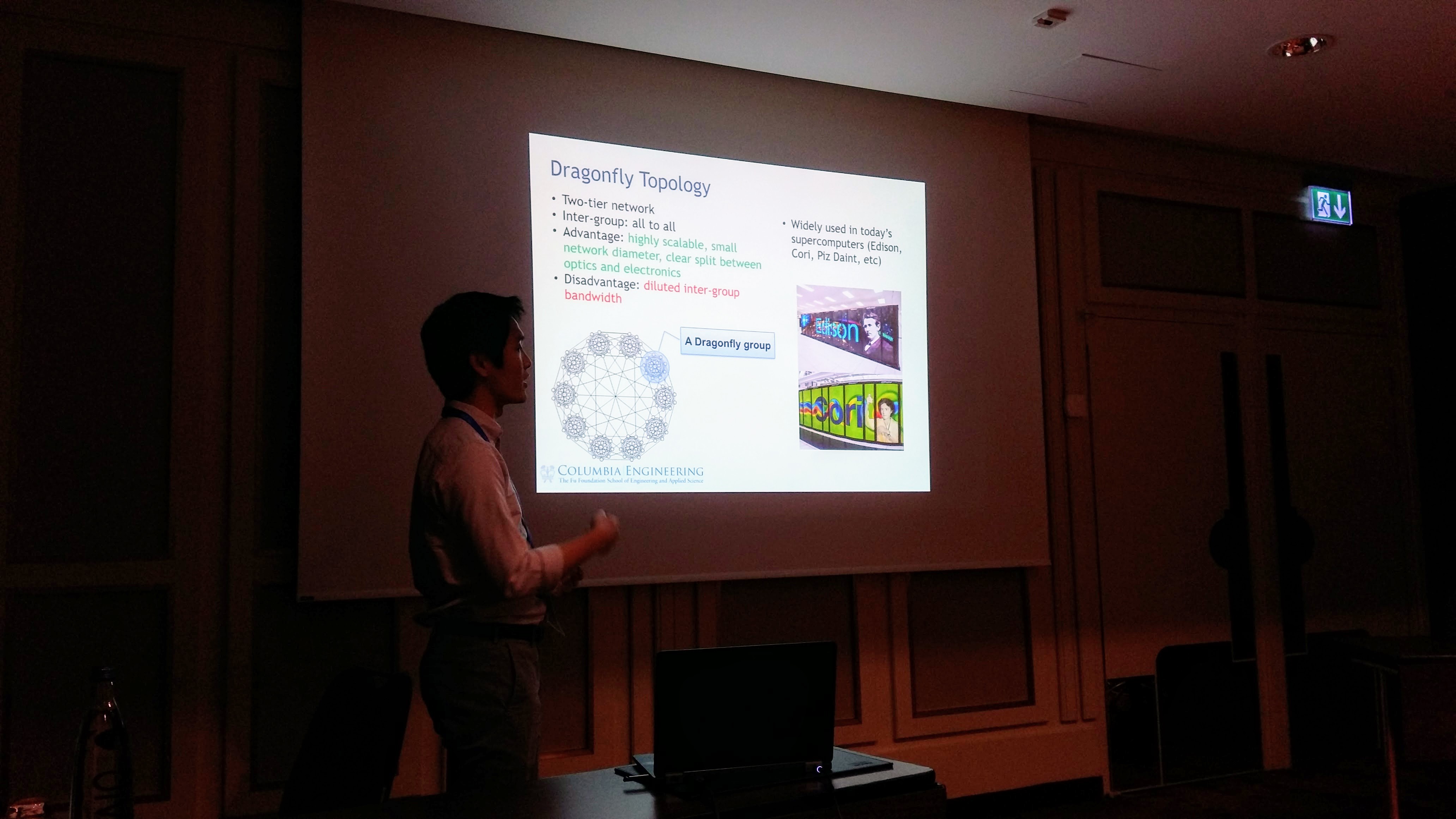

[Best Paper] Design Space Exploration of the Dragonfly Topology

Min Yee Teh1, Jeremiah J. Wilke2, Keren Bergman1, and Sébastien Rumley1

1Columbia University, 2Sandia National Laboratories

Abstract

We investigate all possible options to create a Dragonfly topology able to accommodate a specified number of end-points. We first observe that any Dragonfly topology can described with two main parameters, imbalance and density, dictating the distribution of routers in groups, and the inter-group connectivity, respectively. We introduce an algorithm taking the desired number of end-points and these two parameters as input, and delivering a topology as output. We calculate a variety of metrics on generated topologies resulting from a a large set of parameter combinations. Based on these metrics, we isolate the subset of topologies that present the best economical and performance trade-off. We conclude by summarizing guidelines for Dragonfly topology design and dimensioning.

Bio

Min Yee Teh is currently a graduate student at Columbia University's Lightwave Research Laboratory, under the supervision of Professor Keren Bergman. His current research focus is on the modelling and simulating of optical interconnect networks in high performance computing. In particular, his research interest lies in the integration of photonics into modern computing platforms to boost performance and power consumption. Prior to joining Columbia Engineering, he graduated with a degree in Electrical Engineering from Brown University in the year 2016. Shortly before graduating from his undergraduate studies, he interned at Analog Devices' Automotive MEMS team, during which he worked on MEMS gyroscopes and accelerometers.

High-throughput Sockets over RDMA for the Intel Xeon Phi Coprocessor

Aram Santogidis1 and Spyros Lalis2

1Maynooth University, Ireland and CERN, 2University of Thessaly, Greece

Abstract

In this paper we describe the design, implementation and performance of Trans4SCIF, a user-level socket-like transport library for the Intel Xeon Phi coprocessor. The Trans4SCIF library is primarily intended for high-throughput applications. Internally, it uses RDMA transfers over the native SCIF support, but does this in a way that is transparent for the application which has the illusion of using conventional stream sockets. Furthermore, we discuss the integration of Trans4SCIF with the ZeroMQ messaging library, which is used extensively by several applications running at CERN, and show that this can lead to a substantial, up to 3x, increase of application throughput compared to the default TCP/IP transport option.

Bio

Aram Santogidis received his Diploma in Computer & Communications Engineering at the University of Thessaly. As a member of the .Intel-CERN European Doctorate Industrial Program" he is currently conducting Ph.D studies at Maynooth University and CERN on the topic of "Data transfer aspects of manycore processors for high-throughput applications".

4:00 - 4:30

Break

Abstract

In that talk we will briefly review the trends for future designs, starting from the core and up to the node and full system, from the hardware and programming model standpoints. In practice, we have to deal with some physical limits when it comes to inter or intra communication and interconnections, usually expressed in terms of latency and bandwidth. One extra dimension is of course that any new technologies would have to meet the requirements of the HPC applications. For that purpose, we will discuss how we can model real word applications behaviors and how to work around the fact that merging the information coming from cycle accurate simulators and from large scale simulation data is not an easy task, keeping in mind that the physics (equations) and the test case (field data) will also keep growing.

Bio

Philippe Thierry is Principal Engineer at Intel within the co-design and pathfinding group. His main focus is on real application characterization and performance modeling by using high level methodology and uncertainties quantification to validate impact of any future designs on application behaviors. Prior to that pathfinding activities, Philippe led the Intel Energy Engineering Team mostly focusing on Oil and gas applications. His work included profiling and tuning of HPC applications for current and future platforms. Philippe obtained a Ph-d in Geophysics on seismic imaging from Paris School of Mines, France, where he has spent several years working on 3D prestack depth migration and High Performance Computing. Philippe also worked at SGI as benchmarking team leader before joining Intel Corp in Paris area.

Panel Members

Ron Brightwell, R&D Manager, Scalable System Software, Sandia National Laboratories

Ada Gavrilovska, Associate Professor, Georgia Tech

Mitch Gusat, Researcher, IBM Zurich

Richard Graham, Senior Solutions Architect, Mellanox Technologies